Bagging Machine Learning Ensemble

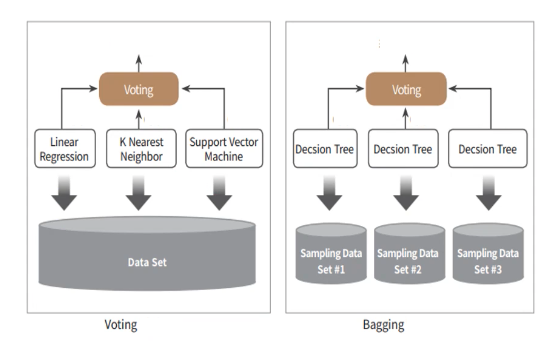

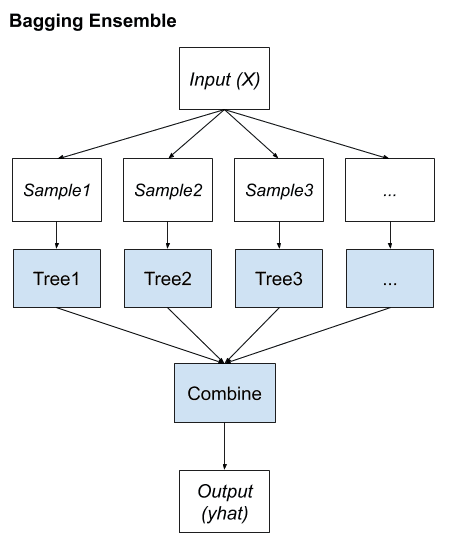

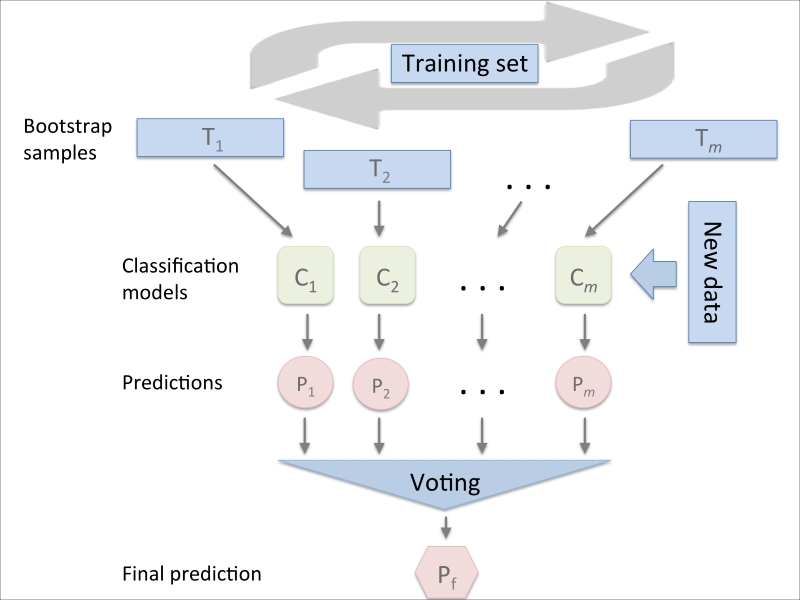

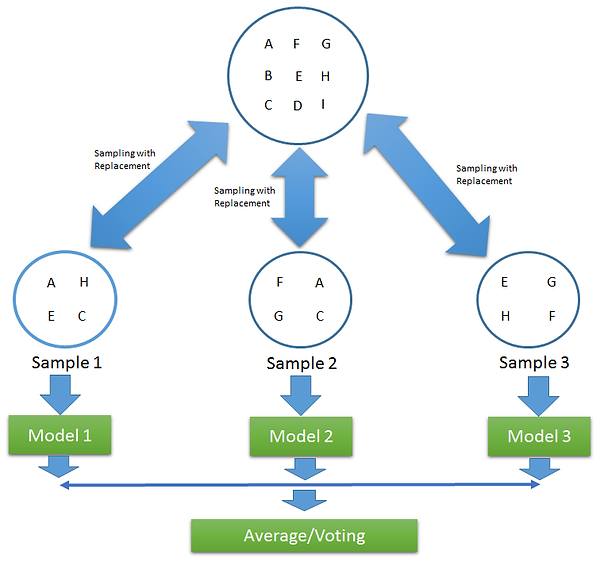

Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms. An ensemble consists of a set of individually trained base learners (models) whose predictions are combined when classifying new cases.
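The combination step can be sketched in a few lines of Python. This is a minimal illustration that assumes the base learners have already been trained and have each produced one prediction for the new case. `combine_by_vote` and `combine_by_average` are hypothetical helper names, not part of any library. Plurality voting is the usual rule for classification, and averaging is the usual rule for regression.

```python
from collections import Counter


def combine_by_vote(predictions):
    """Return the class label predicted by the most base learners.

    `predictions` holds one label per base learner for a single new case.
    Ties go to whichever tied label appears first in `predictions`,
    because Counter.most_common keeps first-encountered order for equal counts.
    """
    counts = Counter(predictions)
    return counts.most_common(1)[0][0]


def combine_by_average(predictions):
    """Return the mean of the base learners' numeric predictions (regression)."""
    return sum(predictions) / len(predictions)


print(combine_by_vote(["spam", "ham", "spam"]))  # spam
print(combine_by_average([2.0, 3.0, 4.0]))       # 3.0
```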


Previous research has shown that …

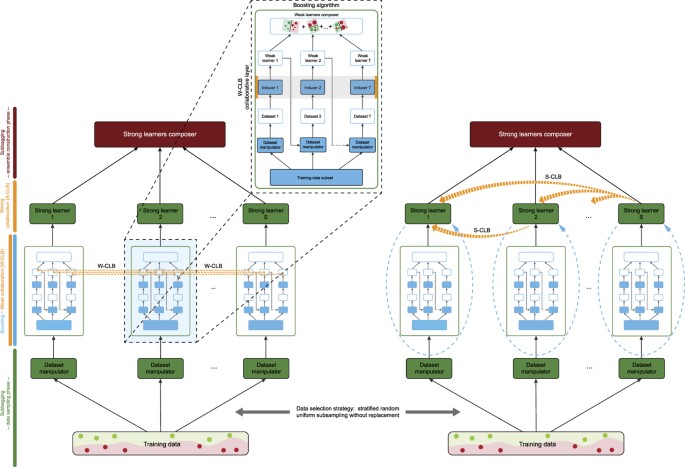

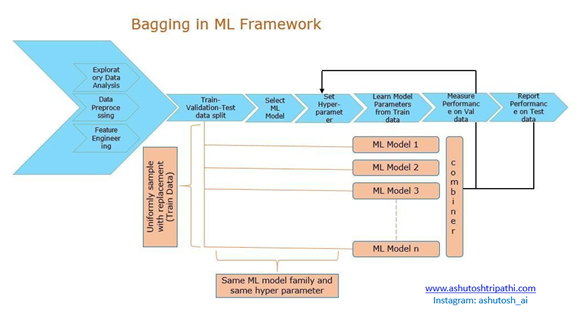

A fusion bagging-based ensemble framework (FBEF) has been proposed that, compared with a simple bagging ensemble framework, uses three weak learners in … As base learners, the optimal regression tree (RT) and the extreme learning machine (ELM) are integrated into four ensemble strategies, i.e., bagging (BA), boosting (BO), random …

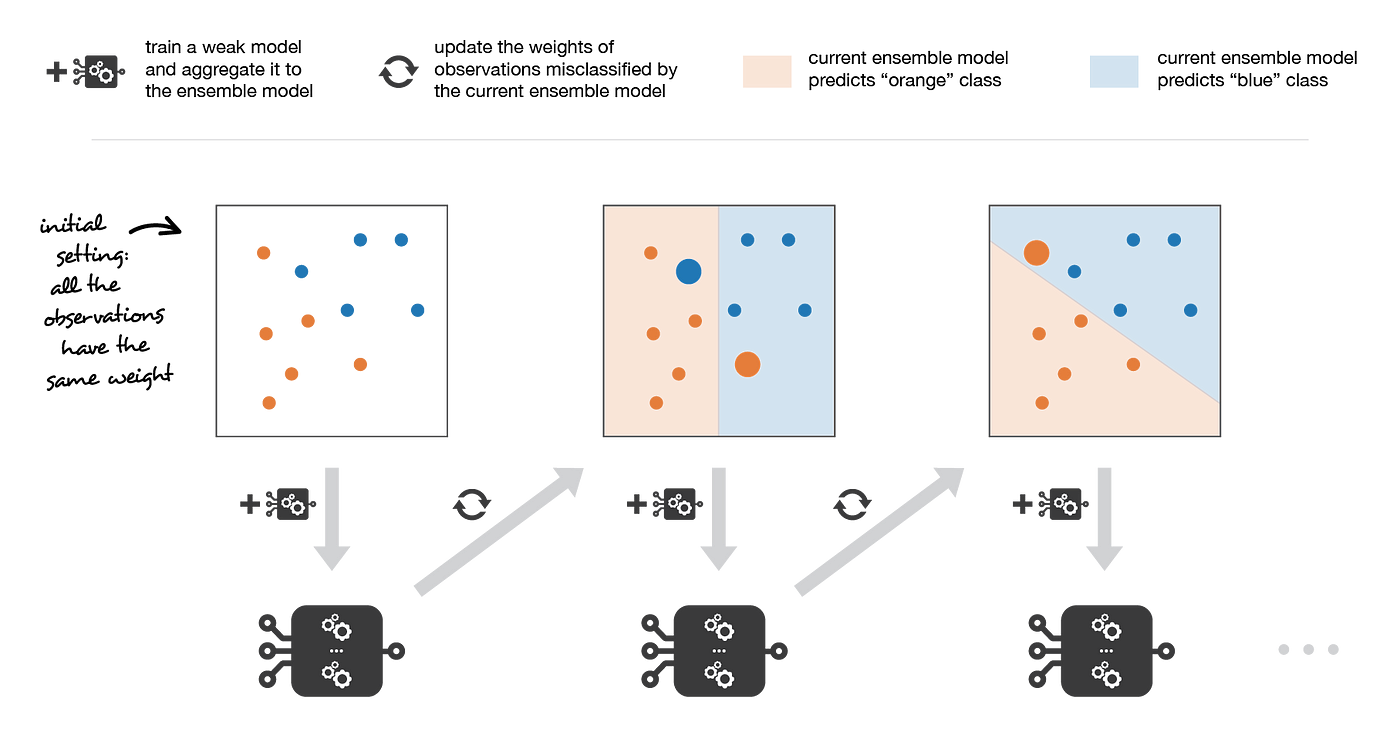

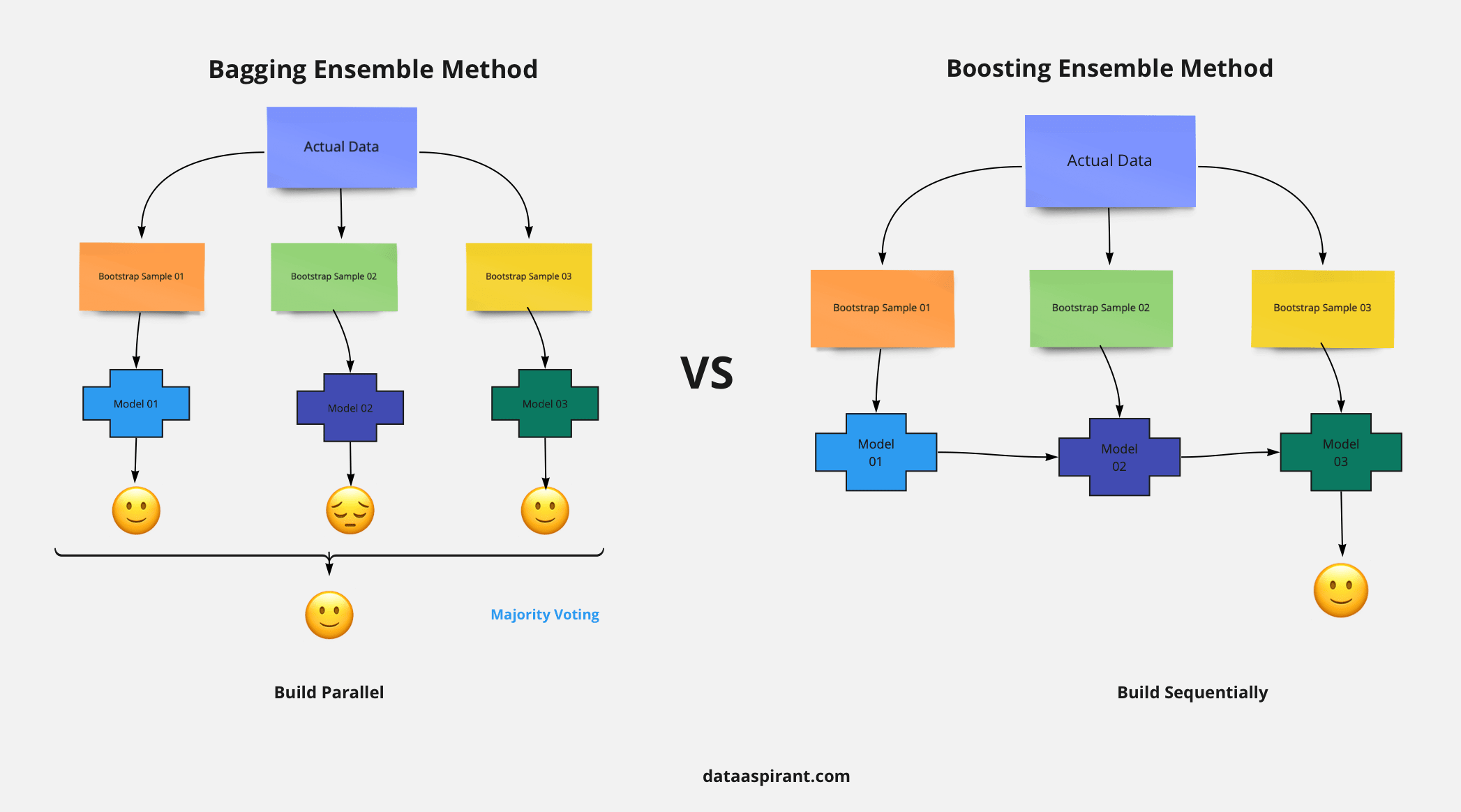

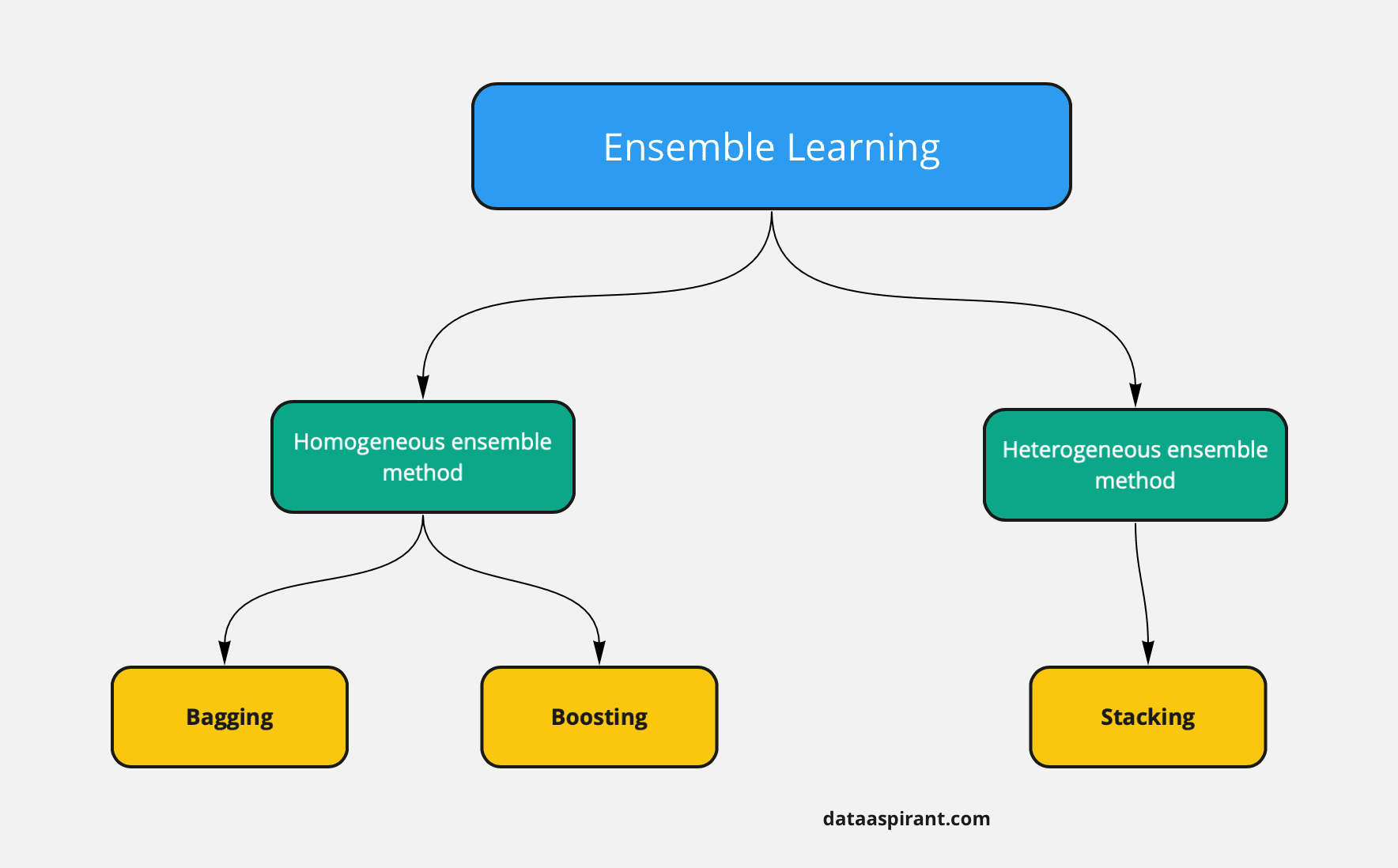

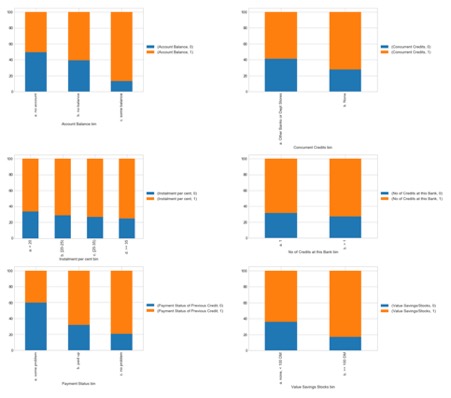

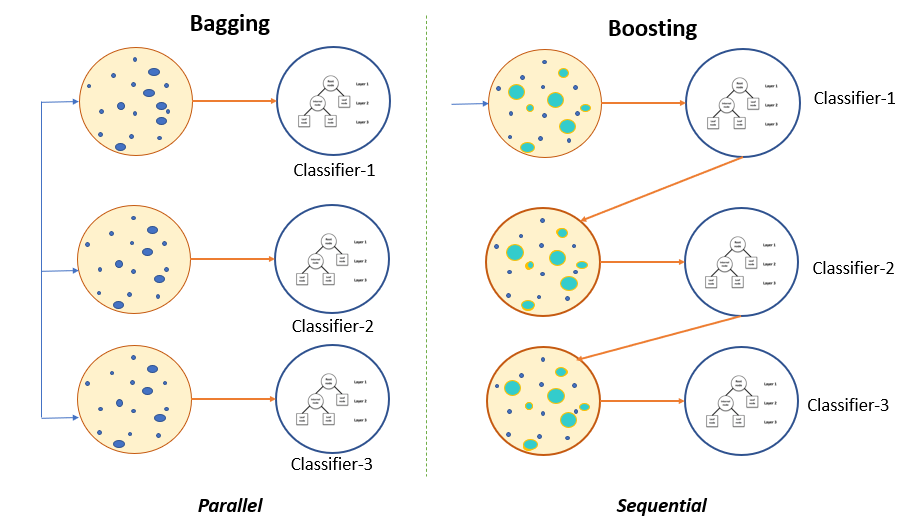

Bagging and boosting are ensemble methods that obtain N learners from a single base learning algorithm. Ensemble machine learning methods are commonly grouped into bagging and boosting. The bagging technique is useful for both regression and statistical classification.
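For instance, scikit-learn provides `BaggingClassifier` and `BaggingRegressor`. The sketch below assumes scikit-learn is installed; the synthetic data from `make_classification` and `make_regression`, the decision-tree base learners, and `n_estimators=50` are illustrative choices, not recommendations. It applies the same bagging procedure to a classification task and a regression task.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import BaggingClassifier, BaggingRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Classification: bag 50 decision trees, each fit on a bootstrap sample.
X_c, y_c = make_classification(n_samples=500, n_features=10, random_state=0)
Xc_train, Xc_test, yc_train, yc_test = train_test_split(X_c, y_c, random_state=0)
clf = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)
clf.fit(Xc_train, yc_train)
clf_accuracy = clf.score(Xc_test, yc_test)  # mean accuracy on held-out data

# Regression: same procedure, but the trees' predictions are averaged.
X_r, y_r = make_regression(n_samples=500, n_features=5, noise=10.0, random_state=0)
Xr_train, Xr_test, yr_train, yr_test = train_test_split(X_r, y_r, random_state=0)
reg = BaggingRegressor(DecisionTreeRegressor(), n_estimators=50, random_state=0)
reg.fit(Xr_train, yr_train)
reg_r2 = reg.score(Xr_test, yr_test)  # coefficient of determination R^2

print(f"classification accuracy: {clf_accuracy:.3f}")
print(f"regression R^2: {reg_r2:.3f}")
```

The base estimator is passed positionally because its keyword was renamed from `base_estimator` to `estimator` in scikit-learn 1.2. Note that `BaggingClassifier` averages predicted class probabilities when the base estimator supports `predict_proba` and falls back to majority voting otherwise.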

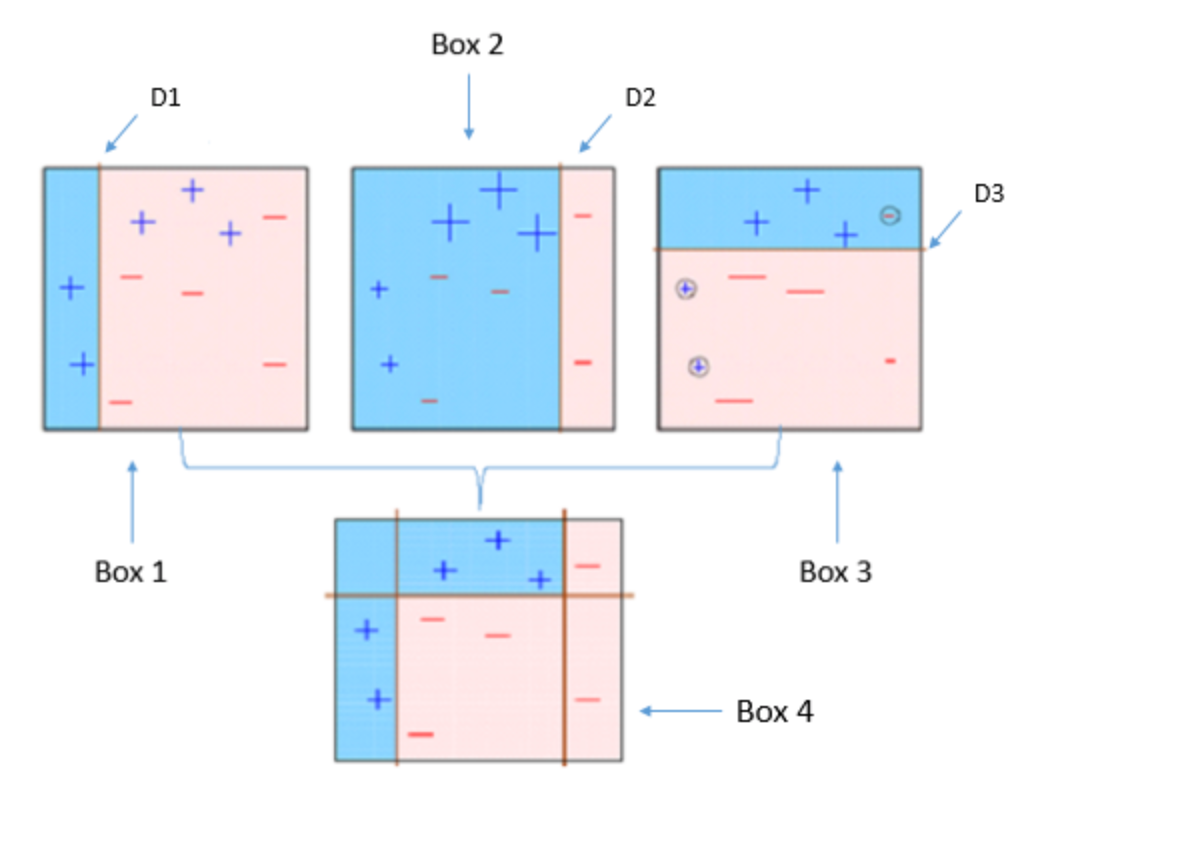

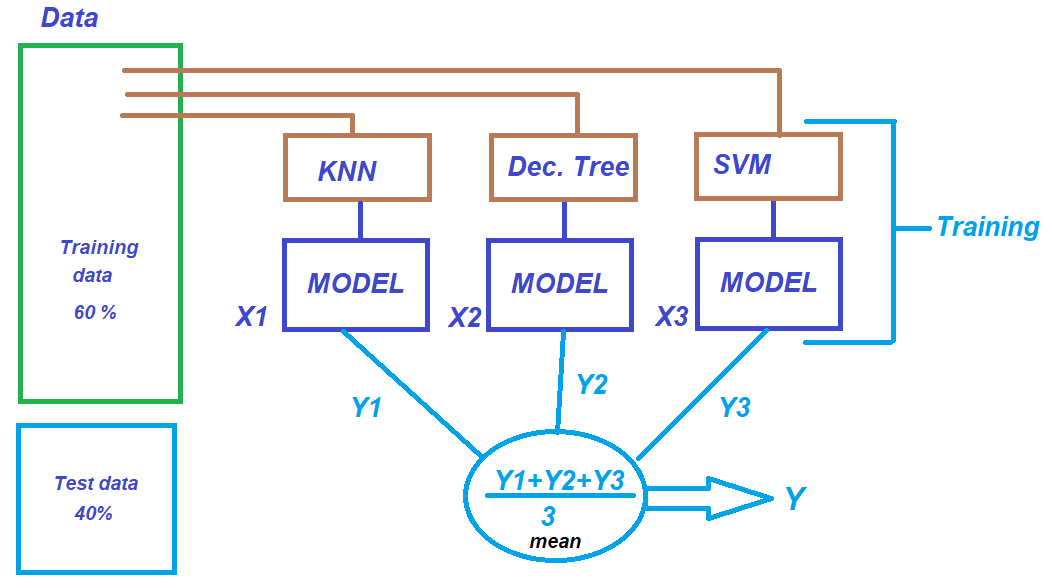

Ensemble learning combines the predictions of multiple models to obtain better predictions with lower variance. A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions (either by voting or by averaging) to form a final prediction.
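To make the fit-then-aggregate procedure concrete, here is a from-scratch sketch in plain Python. It assumes a single numeric feature and uses a decision stump (a one-split tree) as the base classifier. `fit_stump`, `fit_bagging`, and `predict_bagging` are hypothetical names for illustration. The random subsets are bootstrap samples drawn with replacement, as in classic bagging, and predictions are combined by majority vote.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump: predict `right` if x >= t, else `left`.

    Tries every observed x as the threshold t and every pair of labels,
    keeping the first combination with the fewest training errors.
    """
    labels = sorted(set(ys))
    best = None
    for t in sorted(set(xs)):
        for left in labels:
            for right in labels:
                errors = sum((right if x >= t else left) != y for x, y in zip(xs, ys))
                if best is None or errors < best[0]:
                    best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: right if x >= t else left


def fit_bagging(xs, ys, n_estimators=25, seed=0):
    """Train one stump per bootstrap sample (n draws with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_bagging(models, x):
    """Aggregate the stumps' predictions for x by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


xs = list(range(10))
ys = [0] * 5 + [1] * 5
models = fit_bagging(xs, ys)
print(predict_bagging(models, 1), predict_bagging(models, 9))
```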

Ensemble learning is a machine learning paradigm where multiple models, often called weak learners or base models, are trained to solve the same problem and combined to obtain better results.

Bagging means bootstrap aggregating: an ensemble method in which we first bootstrap our data, train one model on each bootstrap sample, and then aggregate the models' predictions. The name "bagging" is formed from "bootstrap aggregating".
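The bootstrap step draws, for each model, a sample the same size as the training set, uniformly with replacement. The minimal sketch below uses Python's `random` module and assumes the training items are distinct and hashable. `bootstrap_sample` and `out_of_bag` are hypothetical helper names. It also shows a well-known side effect: each bootstrap sample leaves out roughly a fraction (1 - 1/n)^n, which is close to 1/e or about 36.8%, of the original items. These are the so-called out-of-bag items.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def out_of_bag(data, sample):
    """Items of `data` (assumed distinct) that never appear in `sample`."""
    chosen = set(sample)
    return [item for item in data if item not in chosen]


rng = random.Random(42)
data = list(range(10_000))
sample = bootstrap_sample(data, rng)
oob_fraction = len(out_of_bag(data, sample)) / len(data)
print(f"out-of-bag fraction: {oob_fraction:.3f}")  # expected to be near 0.368
```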

Bagging and boosting both use random sampling to generate several training data sets. Machine learning is a subfield of artificial intelligence that enables models to learn from data without being explicitly programmed.
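Bagging and boosting both resample the training data, but they do so differently. The minimal sketch below assumes a boosting variant that resamples by example weights (AdaBoost can also reweight examples instead of resampling) and uses made-up weights. In this sketch, bagging draws every example with equal probability, while boosting favors heavily weighted examples. `bagging_resample` and `boosting_resample` are hypothetical helpers built on the standard `random.choices` function.

```python
import random


def bagging_resample(data, size, rng):
    # Bagging: every item is equally likely, and each draw is independent.
    return rng.choices(data, k=size)


def boosting_resample(data, weights, size, rng):
    # Boosting (resampling variant): items with larger weights, typically
    # those the previous learner misclassified, are drawn more often.
    return rng.choices(data, weights=weights, k=size)


rng = random.Random(0)
data = ["a", "b", "c", "d"]
# Hypothetical weights after a boosting round in which "d" was misclassified.
weights = [1.0, 1.0, 1.0, 7.0]

bag = bagging_resample(data, 10_000, rng)
boost = boosting_resample(data, weights, 10_000, rng)
print("share of 'd' (bagging): ", bag.count("d") / len(bag))
print("share of 'd' (boosting):", boost.count("d") / len(boost))
```

Because bagging's draws do not depend on any earlier model, its samples and models can be produced in parallel. Boosting must update the weights after each learner, so it runs sequentially.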

Bagging, a parallel ensemble method whose name stands for bootstrap aggregating, is a way to decrease the variance of the prediction model by generating additional data in the training stage.
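Why averaging reduces variance can be seen from a standard identity. Assume the $B$ bagged predictors $\hat f_b(x)$ are identically distributed, each with variance $\sigma^2$, and have pairwise correlation $\rho$. Summing the $B$ variances and the $B(B-1)$ covariances and dividing by $B^2$ gives:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

As $B$ grows, the second term vanishes but the first does not. Bootstrap samples overlap, so $\rho > 0$ in practice and averaging cannot remove that part. For example, with $\sigma^2 = 1$, $\rho = 0.5$ and $B = 10$, the variance falls from $1$ for a single model to $0.5 + 0.05 = 0.55$.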
